Biography

I am a Ph.D. candidate with five years of experience in speech signal processing, sequence-to-sequence (S2S) modeling, and deep learning. I have worked on speaker recognition in academia and on speech recognition in industry. My research and work interests include speech and speaker recognition and generative speech AI such as TTS and voice conversion.

Download my resumé.

- Artificial Intelligence

- Speech Signal Processing

- Singing Voice / Speech Synthesis

- Speech Recognition

- Language Processing

- PhD in Informatics

  National Institute of Informatics & SOKENDAI

- MEng in Electrical Engineering and Information Systems (EEIS), 2020

  The University of Tokyo

- BSc in Measurement and Control Technology and Instruments, 2016

  Tianjin University

Featured Publications

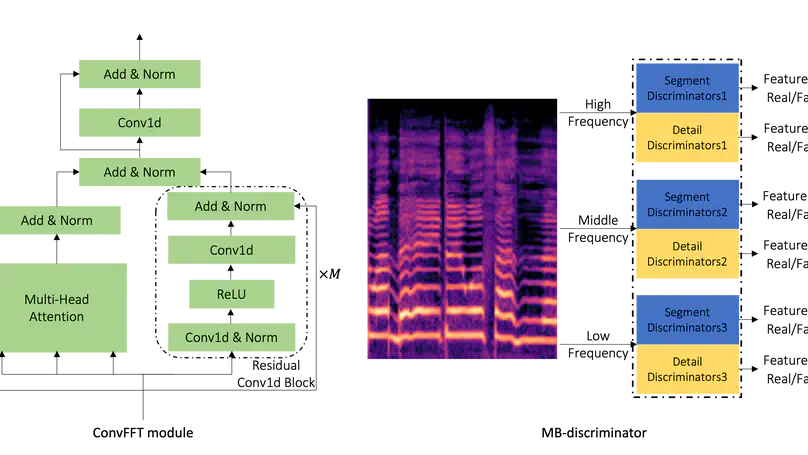

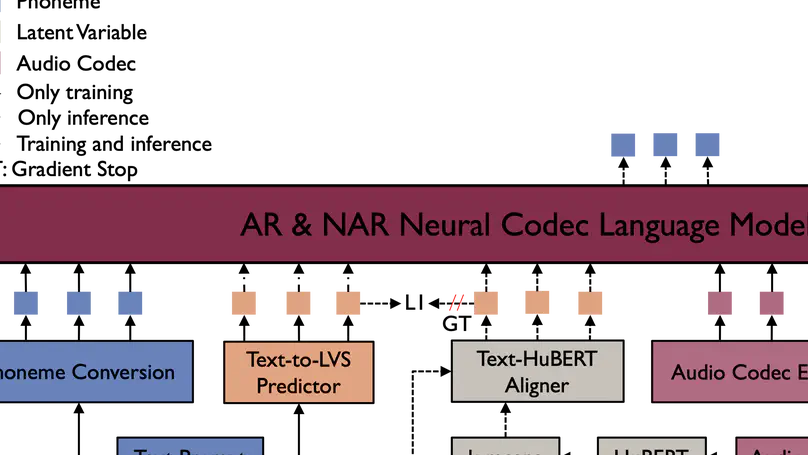

We introduce a novel token-based text-to-speech (TTS) model with 0.8B parameters, trained on a mix of real and synthetic data totaling 650k hours, to address issues like pronunciation accuracy and style consistency. This model integrates a latent variable sequence with enhanced acoustic information into the TTS system, reducing errors and style changes. Our training includes data augmentation for improved timbre consistency, and we use a few-shot voice conversion model to generate diverse voices. This approach enables learning of one-to-many mappings in speech, ensuring both diversity and timbre consistency. Our model outperforms VALL-E in pronunciation, style maintenance, and timbre continuity.

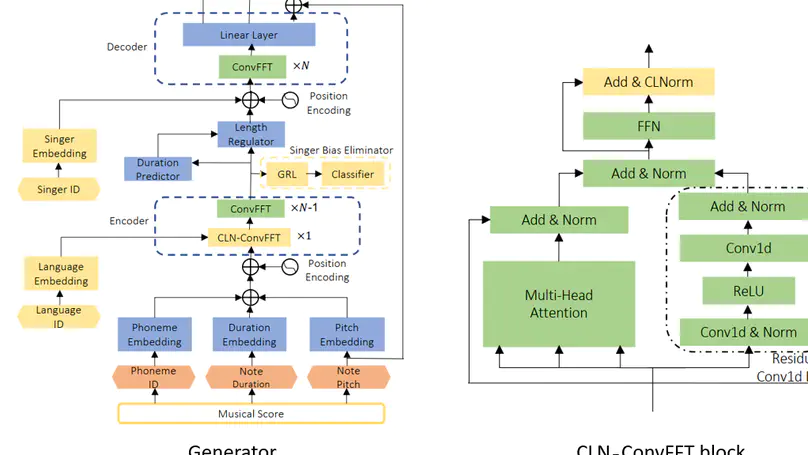

This paper presents CrossSinger, a cross-lingual singing voice synthesizer based on Xiaoicesing2. It tackles the challenge of creating a multi-singer high-fidelity singing voice synthesis system with cross-lingual capabilities using only monolingual singers during training. The system unifies language representation, incorporates language information, and removes singer biases. Experimental results show that CrossSinger can synthesize high-quality songs for different singers in various languages, including code-switching cases.

Publications

Projects

Skills

Experience

Responsibilities include:

- Speech signal processing

- Speech recognition

- Speech synthesis

- Generative AI

Responsibilities include:

- Speech signal processing

- Singing voice synthesis

- Speech synthesis

Responsibilities include:

- Speech signal processing

- Speaker recognition

- Antispoofing

Responsibilities include:

- Speech signal processing

- Speaker recognition

- Speech recognition

- Self-supervised learning

- Spoken term detection

Responsibilities include:

- Speech signal processing

- Speaker recognition