Photo by rawpixel on Unsplash

The research topics cover text-to-audio (TTA), singing voice synthesis (SVS), music generation, and, in the future, more multimodal generative tasks.

Chang ZENG 曾畅 曾 暢 (ソウ チョウ)

Ph.D. Candidate

My research interests include speech signal processing, speaker recognition, anti-spoofing, and singing voice synthesis.

Publications

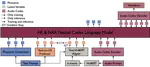

We introduce a novel token-based text-to-speech (TTS) model with 0.8B parameters, trained on a mix of real and synthetic data totaling 650k hours, to improve pronunciation accuracy and style consistency. The model integrates a latent variable sequence with enhanced acoustic information into the TTS system, reducing pronunciation errors and unintended style changes. Our training includes data augmentation for improved timbre consistency, and we use a few-shot voice conversion model to generate diverse voices. This approach enables learning of one-to-many mappings in speech, ensuring both diversity and timbre consistency. Our model outperforms VALL-E in pronunciation, style maintenance, and timbre continuity.

Chunhui Wang, Chang Zeng, Bowen Zhang, Ziyang Ma, Yefan Zhu, Zifeng Cai, Jian Zhao, Zhonglin Jiang, Yong Chen

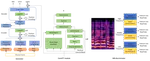

This paper presents XiaoiceSing2, an enhanced singing voice synthesis system that addresses over-smoothing issues in middle- and high-frequency areas of mel-spectrograms. It employs a generative adversarial network (GAN) with improved model architecture to capture finer details.

Chunhui Wang, Chang Zeng, Xing He